The nexus of materialized sound and sonified material

Markus Buehler explores the interface of material and sound, explaining how we can transcend scales in space and time to make the invisible accessible to our senses and to manipulate it from different vantage points. The talk starts at the macroscale, with things we can see with our eyes, and moves to smaller scales to probe the unique features of the nano-world. Learn how sound can be shaped with molecular vibrations, how molecules can be designed with new sound, and how the neural networks of living systems (their brains) can serve as a medium for translation between different material manifestations.

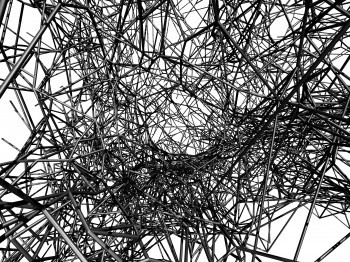

Buehler begins with a brief review of recent work with CAST Visiting Artist Tomás Saraceno, CAST Faculty Director Evan Ziporyn, and others on studying three-dimensional spider webs, modeling them, and translating those complex structures into a playable musical instrument. The instrument is used to manipulate the natural sounds of spider webs, generating human input that can be fed back to the spider. The spider processes these signals as vibrations of the silk strings and responds by altering its behavior and the silk it produces, reflecting a vision in which sound becomes material.

While spider webs are fascinating, there is more to see at the nano-level, where everything is in constant motion. Tiny objects are excited by thermal energy and set in motion, undergoing large deformations. Taking advantage of this phenomenon, one can compute the frequency spectrum of every known protein structure (more than 110,000) and translate that motion into audible sound. Using AI, these natural sounds of proteins are evolved into new patterns, exploring the interface between human musical expression and learned behavior, and how that interface can guide the discovery of new materials and a better understanding of physiology and disease etiology.

Proteins are the most abundant building blocks of all living things, and their motion, structure, and failure in the context of disease are foundational questions that transcend many academic disciplines. The structure of the very protein materials that build our bones, skin, organs, and brains finds representation in the creative expressions humans have produced over tens of thousands of years: our bodies, how they function in a healthy state and how they fail in disease, are reflected in all expressions of art. With sound as a new kind of microscope, we can begin to see the world within us and exploit it for new engineering designs.

Translating between different hierarchical systems offers a powerful paradigm for understanding the emergence of properties in materials, sound, and related systems. It also provides new design methods to materialize what we hear and helps us understand how materials build us up.

Markus J. Buehler

Jerry McAfee (1940) Professor

Markus J. Buehler is the McAfee Professor of Engineering at MIT, Head of the Department of Civil and Environmental Engineering (CEE), and leads the Laboratory for Atomistic and Molecular Mechanics (LAMM).

His primary research focuses on the structure and mechanical properties of biological and bio-inspired materials, with the aim of characterizing, modeling, and creating materials with architectural features from the nano- to the macroscale.